Antony Evans / Digital Puppets UK

Hi, I’m Antony Evans, co-founder of Digital Puppets, an animation studio based in the UK. As a character designer with years of experience in real-time animation, I’ve collaborated with industry giants like Disney, Warner Bros, and the BBC. My expertise lies in creating custom characters and real-time avatar puppetry for a wide range of clients.

At Digital Puppets, we specialize in replicating existing licensed characters, designing company mascots, and producing motion capture characters for content creators, educators, and marketing agencies. Beyond character creation, we also develop fully animated shows.

One of our recent projects was a 50-episode animated series for the UK radio station RADIO X, where we transformed podcast hosts into engaging animated content for their online audience. We’ve also worked with major broadcasters like the BBC and Cartoon Network, though some projects remain undisclosed due to NDAs.

Concept & Character Creation

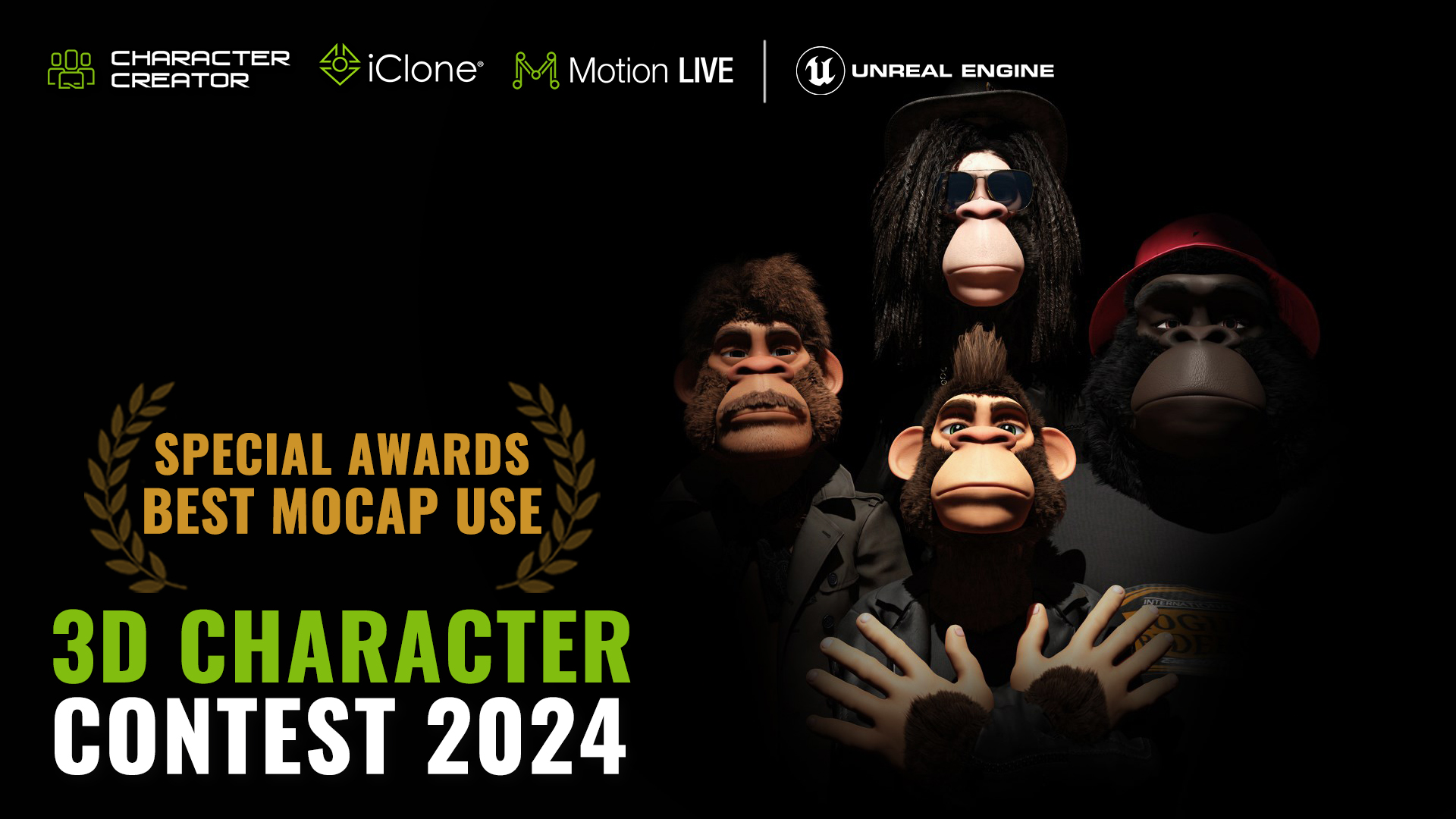

Last year, we submitted our “Monkey Band” animation to the Reallusion 2024 Character Contest, where it won the “Best Mocap Use” award in the Special Awards category. This project pushed us to explore new workflows and integrate innovative techniques into our existing pipeline.

The inspiration behind Monkey Band came from our desire to experiment with fur creation in Character Creator. Using monkeys allowed us to utilize the humanoid base mesh, giving us access to Character Creator’s clothing and hair systems. This approach helped us craft unique outfits and distinctive looks for each band member, adding depth to their personalities.

In this article, I’ll share our full creative process and the tools we used, including iClone and Unreal Engine, to bring Monkey Band to life.

The concept was inspired by our long-standing interest in virtual bands that can perform in real time. To achieve this, we equipped the characters with detailed facial morphs so they hold up well during live facial capture. The band was specifically designed to enable real-time virtual avatar control for the lead character, while allowing us to stream pre-recorded performances seamlessly. You can see an example of this in action here.

The characters were developed using a combination of Character Creator 4 (CC4) tools and ZBrush via the GoZ workflow. ZBrush was instrumental in adding intricate details to the base model and in sculpting facial morphs. Its capabilities in creating organic shapes allowed for rapid exploration of different looks, which greatly contributed to giving each band member a unique personality.

While designing the monkey characters, we utilized preset hair cards to create their fur. Specifically, the “Fur Collar” and “Fur Cuffs” presets from the “Stylings” pack in CC4 proved invaluable. By leveraging the Edit Mesh tool, we customized and duplicated these hair cards to achieve a full-body fur effect. For a more in-depth look at the process, you can refer to our video on customizing hair.

iClone Motion LIVE for Facial Animation

When using LIVE FACE for facial capture, it’s important to exaggerate expressions. Overacting ensures that subtle details are captured, and it’s easier to tone down exaggerated expressions in post-processing than to add missing nuance. The quality of the blend shapes is also critical: take time to review them in the Facial Profile Editor and confirm they work well, particularly for stylized characters like the monkeys. Modifying the base mesh for a stylized appearance can distort blend shapes, so proper setup is essential for high-quality facial capture.
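To illustrate why an overacted take is easy to fix, here is a minimal Python sketch of toning down a captured blend-shape curve. It is only a conceptual illustration, not how iClone processes the data internally; the function name `tone_down`, the `strength` parameter, and the sample weights are all assumptions made for the example.

```python
def tone_down(curve, strength=0.7):
    """Scale per-frame blend-shape weights (0..1) by a strength factor.

    Hypothetical helper: a strength below 1.0 softens an overacted take.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # Clamp so the result always stays a valid blend-shape weight.
    return [min(1.0, max(0.0, weight * strength)) for weight in curve]


# Made-up weights for a single blend shape (for example a brow raise).
overacted = [0.0, 0.5, 1.0, 0.8, 0.2]
softened = tone_down(overacted, strength=0.5)
```

Scaling down keeps the shape of the performance intact. Going the other way, boosting an underacted take, would amplify capture noise along with the expression, which is one reason overacting at capture time tends to pay off.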

Facial mocap is highly efficient and allows for a quick turnaround. For this animation, I mimed the song while recording the performance using an iPhone and LIVE FACE. Afterward, I reviewed the recording and made adjustments to refine expressions and mouth shapes. Simultaneously, I recorded the audio track and used AccuLips to generate a viseme track.

By blending the viseme track with the expression track, I achieved more natural results. The viseme track ensures accurate mouth shapes for speech, while the expression track adds emotional nuance. This workflow significantly reduced the time required compared to manual facial animation.
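The idea of blending the two tracks can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not iClone's actual blending logic: the blend-shape names, the `MOUTH_SHAPES` set, and the `viseme_weight` parameter are hypothetical.

```python
# Hypothetical blend-shape names; a real facial profile will use its own.
MOUTH_SHAPES = {"Jaw_Open", "Mouth_Funnel", "Mouth_Close"}


def blend_frame(expression, viseme, viseme_weight=0.8):
    """Blend one frame of expression and viseme weights.

    Mouth shapes are a weighted mix of both tracks, so speech stays
    readable. Every other shape comes from the expression track unchanged.
    """
    blended = dict(expression)
    for shape in MOUTH_SHAPES:
        from_expression = expression.get(shape, 0.0)
        from_viseme = viseme.get(shape, 0.0)
        blended[shape] = (
            (1.0 - viseme_weight) * from_expression
            + viseme_weight * from_viseme
        )
    return blended


# Made-up single-frame data for the two tracks.
expression_frame = {"Brow_Raise": 0.6, "Jaw_Open": 0.2}
viseme_frame = {"Jaw_Open": 1.0}
result = blend_frame(expression_frame, viseme_frame, viseme_weight=0.5)
```

In this sketch the brow raise passes through untouched, while the jaw opening lands halfway between the captured expression and the viseme. A higher `viseme_weight` favors clean lip sync; a lower one keeps more of the performer's mouth movement.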

iClone for Body Animation

For this project, we utilized the Xsens Link motion capture suit, which delivered highly accurate results with minimal cleanup. Each character’s movements were recorded in single takes, one after the other. The motion data was processed in Xsens MVN software and exported as FBX files. Importing the mocap data into iClone was seamless—simply drag and drop the FBX file onto the character and select the Xsens profile.

The primary cleanup involved aligning the characters’ hands with their musical instruments. For example, refining the hand placement for the keyboard took the most time. Using an actual keyboard during the mocap session would have been beneficial, as physical props provide clear points of contact and improve accuracy in hand positioning.

Here are some Dos and Don’ts when animating body motion in iClone:

DO:

-Exaggerate your movements to ensure the capture conveys dynamic motion.

-Use real-world props to add weight and realism, especially for interactions like playing instruments.

-Allow sufficient space for natural movement during capture.

DON’T:

-Mime contact with imaginary objects; it’s challenging to replicate consistent positioning without real props.

-Neglect hand and finger alignment during cleanup, especially for detailed tasks like playing instruments.

**Final Render in Unreal**

The workflow between **iClone** and **Unreal Engine** is incredibly efficient, especially with the **Auto Setup plugin**. This tool streamlines the animation transfer process, allowing us to move characters seamlessly into Unreal while preserving materials without the need for manual adjustments. For this project, all animations were crafted in **iClone** and transferred to a **pre-built stage scene in Unreal** using **Live Link**. Once in Unreal, we focused on **camera setup and lighting adjustments** to refine the final visuals.

The **Unreal Sequencer** is intuitive, functioning similarly to traditional timeline tools. One standout feature of **iClone’s Live Link** is its ability to **automatically configure the Sequencer** during animation transfers. With four characters in play, this feature **significantly reduced setup time**, allowing us to focus on refining the overall performance.

Unreal Engine was the perfect choice for handling **large-scale environments** and **real-time rendering**. While iClone’s rendering capabilities were solid, Unreal provided access to **advanced stage assets, pre-configured particle effects, and dynamic lighting**, allowing us to integrate animations seamlessly and achieve a professional-quality final render with minimal setup.

Additionally, Unreal’s real-time capabilities made it **ideal for a live performance setup**. By connecting the **Xsens suit**, we enabled real-time interactions—such as the lead singer engaging with the audience—while also triggering **pre-animated sequences**, like musical performances, with a single button press. Unreal’s **real-time triggers** made it possible for a single operator to control an entire virtual band performance effortlessly.
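The single-operator idea can be pictured as a simple mapping from buttons to pre-animated sequences. The Python sketch below is purely conceptual and does not use any Unreal Engine API; in a real project this would more likely be set up with Blueprints and Sequencer. The class `ShowController`, its methods, and the sequence names are all hypothetical.

```python
class ShowController:
    """Hypothetical one-operator controller: one key starts one sequence."""

    def __init__(self):
        self._triggers = {}  # key -> name of a pre-animated sequence
        self.history = []    # sequences started so far, in order

    def bind(self, key, sequence_name):
        """Assign a pre-animated sequence to a key."""
        self._triggers[key] = sequence_name

    def press(self, key):
        """Start the sequence bound to the key, or return None if unbound."""
        sequence_name = self._triggers.get(key)
        if sequence_name is None:
            return None
        self.history.append(sequence_name)
        return sequence_name


# Made-up bindings for a short set.
show = ShowController()
show.bind("1", "Song_Intro")
show.bind("2", "Song_Chorus")
started = show.press("1")
```

While the band's pre-animated parts are fired this way, the lead character stays under live control from the suit, so the operator only has to think about timing the button presses.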

---

### **Closing Thoughts**

Working on the **“Monkey Band”** project alongside my brother **Scott** has been an incredibly rewarding experience. At **Digital Puppets UK**, we constantly strive to **push the limits of real-time animation and virtual avatars**. By leveraging tools like **iClone, Character Creator, and Unreal Engine**, we’ve streamlined the creation process—allowing us to develop detailed characters and lifelike animations with remarkable efficiency.

Blending **motion capture with real-time performances** has allowed us to **transform simple ideas into polished, high-quality animations** that truly resonate with audiences. Looking back at projects like **animating RADIO X’s podcast** and **collaborations with broadcasters like the BBC and Cartoon Network**, we’ve refined our workflow to consistently deliver top-tier content.

The **“Monkey Band”** project is a testament to how cutting-edge tools and workflows can bring a **virtual band to life**. As technology continues to evolve, we’re excited to see how these innovations **reshape the future of virtual production**—and we’re proud to be at the forefront of this journey.

To wrap things up, what better way to end than with more **Monkey Band fun** for your entertainment?
